Project Overview

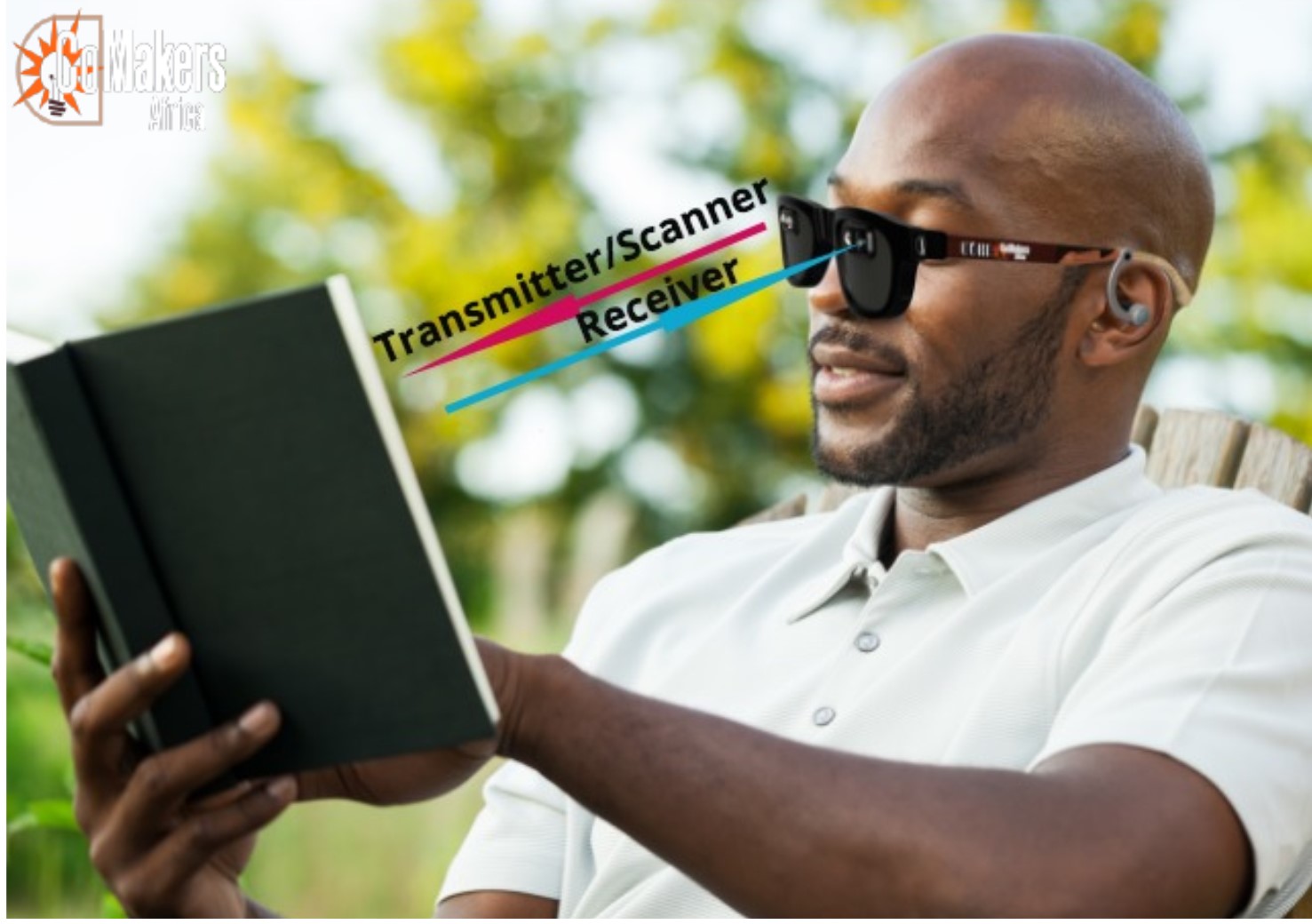

eBraille is a concept for smart eyeglasses that perform real-time image analysis, enabling visually impaired people to read and navigate their surroundings without assistance.

The device analyses images in real time to extract information from them. Using Optical Character Recognition (OCR), eBraille detects and extracts printed text and handwritten letters and digits from notes, newspapers, signboards, marker boards, and other sources, then converts the extracted text to speech.

The device also detects objects, products, and people in images and communicates this information to the user via speech. The image-analytics software runs on the device itself and does not require an internet connection, which avoids delays caused by cloud connectivity issues.
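The flow described above (capture a frame, read text, detect objects, speak the result) can be sketched roughly as follows. This is only an illustrative outline, not the eBraille implementation: the `Pipeline` class, the `describe` and `run` methods, and the message wording are all hypothetical, and the OCR engine, object detector, and text-to-speech engine are represented by plain callables so the sketch runs without any particular library. Skipping repeated descriptions is likewise an assumption about what would be comfortable for the user.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List

# Hypothetical stand-ins: a "frame" is whatever the camera driver returns.
Frame = bytes
TextReader = Callable[[Frame], str]            # OCR: frame -> recognised text
ObjectDetector = Callable[[Frame], List[str]]  # detector: frame -> object labels
Speaker = Callable[[str], None]                # text-to-speech output


@dataclass
class Pipeline:
    """Illustrative on-device loop: analyse each frame, then speak the result."""
    read_text: TextReader
    detect_objects: ObjectDetector
    speak: Speaker

    def describe(self, frame: Frame) -> List[str]:
        """Build the messages for one frame without speaking them."""
        messages: List[str] = []
        text = self.read_text(frame).strip()
        if text:
            messages.append(f"Text: {text}")
        labels = self.detect_objects(frame)
        if labels:
            messages.append("Nearby: " + ", ".join(labels))
        return messages

    def run(self, frames: Iterable[Frame]) -> None:
        """Speak a description only when it differs from the previous frame's."""
        last = None
        for frame in frames:
            messages = self.describe(frame)
            if messages and messages != last:
                for message in messages:
                    self.speak(message)
            last = messages


if __name__ == "__main__":
    spoken: List[str] = []
    pipeline = Pipeline(
        # Fake analysers for demonstration; real ones would wrap actual models.
        read_text=lambda f: "EXIT" if b"sign" in f else "",
        detect_objects=lambda f: ["door"] if b"door" in f else [],
        speak=spoken.append,
    )
    pipeline.run([b"sign door", b"sign door", b"empty"])
    print(spoken)  # ['Text: EXIT', 'Nearby: door']
```

Because every stage is passed in as a function, each one can be swapped for an on-device model without touching the loop, which matches the goal of running without an internet connection.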

Design Thinking and Methodology

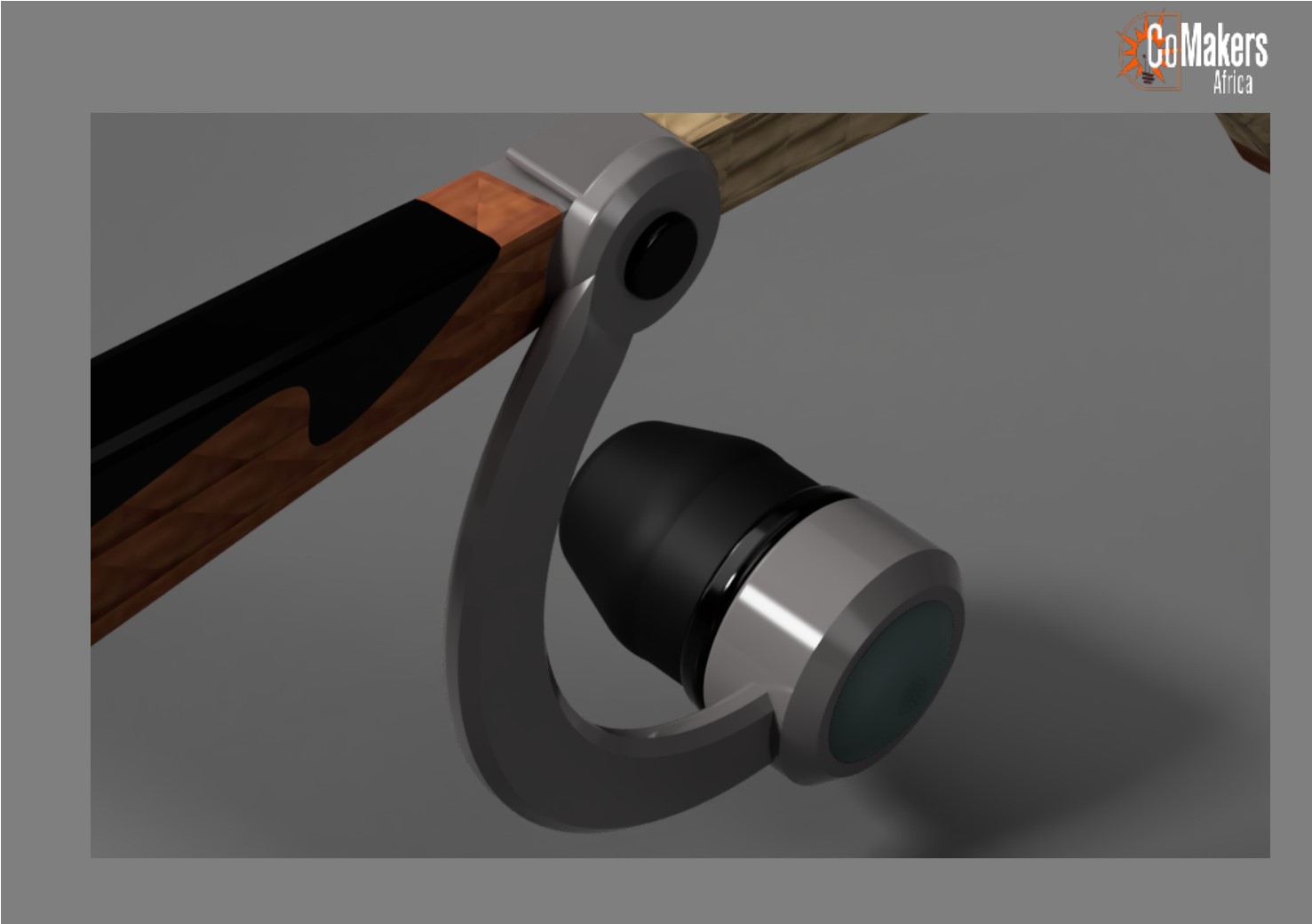

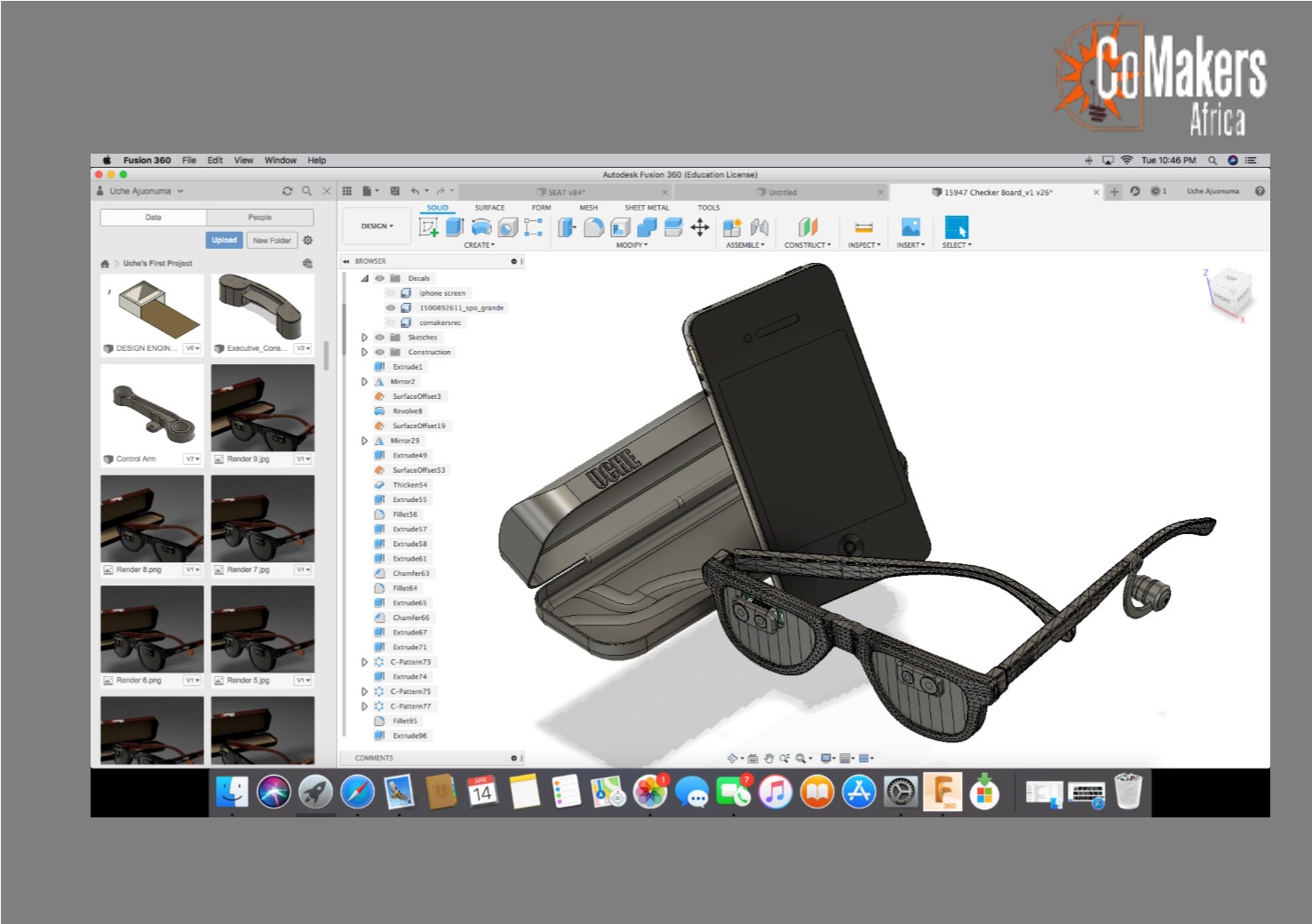

The idea for eBraille was born during a hardware hackathon I chaired in 2018. The hardware consists of a processor powerful enough to run image-processing algorithms, four high-resolution cameras, and a rechargeable battery, all embedded in stylish eyeglasses, plus a Bluetooth earpiece for speech output.

Key Considerations

- Size of the device: Designed to be as compact as possible so that it does not burden the user.

- Cost: Developed with rural areas in mind. Removing the dependency on internet access keeps the device affordable, while premium features remain available to those who can afford them.

- User-friendliness: The software keeps buttons and icons to a minimum for intuitive operation.

Screenshot of the completed 3D-modeled eBraille before rendering